Why I’m Gradually Retiring from Teaching Live Online Classes

I really love Mexico. I love the people, the vibe, the food, the tequila, the beaches, you name it. If I had to pick just one place that’s my happiest…

Musicar Upgrades for My Jaguar XKR-S

When I got my Jaguar XKR-S, one of my favorite things about it was the engine roar. It’s delightful, guttural, magical. However, when I was just cruising and I rolled…

My Favorite One-Week Summer Itinerary for Iceland

A friend of mine came over to Iceland for a week, so I planned a road trip. This road trip does require an off-road-capable vehicle, like a Dacia Duster or…

Sold the Land Rover Defender.

Our adventure in Iceland is coming to an end, and we’re moving back to California at the end of this month. We can’t bring our Defender with us – the…

Reminding Myself What Life Was Like During COVID19: Day 501

It’s been a while since I’ve written one of these, and as I watched one cruise ship pull out of Húsavík, Iceland and another cruise ship pull in to take…

Doug DeMuro Reviewed My Jaguar XKR-S

Doug DeMuro is a YouTuber who reviews cars in a different way: he doesn’t focus on their 0-60 times or their gas mileage, but rather their quirks & features. He…

Iceland Tourist Tips

We’ve been living in Iceland for a few months now. I don’t wanna rehash the stuff you’ll find in typical tourist advice, but rather talk about stuff that I’d wanna…

Epic Life Quest: Level 15 Completed.

Life’s little moments of success can pass us by so easily. If we don’t track the things we’re proud of, we lose track of how far we’ve come. Steve Kamb’s Epic Quest of…

Things I Love About Teleworking in Iceland

We moved to Iceland in January to work remotely, and there’s a lot about it that feels like we’re living in the future. It’s an easy transition for Americans. Cars…

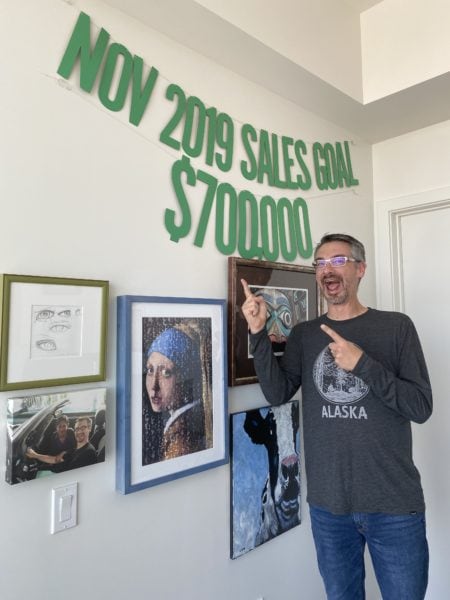

How the Company-Startup Thing Worked Out For Me, Year 9

It’s time for my annual update on the wild ride. If you want to catch up, check out past posts in the Brent Ozar Unlimited tag. This post covers year…

Hi, I’m Brent Ozar. I live in Las Vegas, Nevada. I’m on an epic life quest to have fun and make a difference.

Some of my projects include Brent Ozar Unlimited, PollGab, and Smart Postgres.

I love teaching, travel, laughing, and collecting vintage sports cars. You can follow me on Instagram, Threads, TikTok, Bsky, Facebook, LinkedIn, GitHub, whatever.