Yesterday, I blogged what I did in a random work day per Steve Jones’ suggestion. And yesterday, I said I’d blog today as well because it was going to be a different schedule.

You shouldn’t read this. It’s useless.

It’s useless because as I look back on it, I didn’t accomplish much of anything on Wednesday, July 18th. That’s not rare – there are plenty of days where I feel like I don’t make enough progress. Thing is, Steve wanted us to share the tools we’re using and what problems we’re solving, and this post is pretty well devoid of that information because I didn’t solve problems yesterday.

I didn’t know that when I started the day, obviously.

I was going to delete this, but screw it – it’s already written, so presented for your amusement: a day when I don’t solve any big valuable problems, just tread productivity water:

4:15AM-4:30 – Emails. A quick round of emails before showering and going out for coffee.

5:15-6:00 – Reading blogs. Not a lot of new stuff posted yesterday. I answer a DBA.StackExchange.com question about setting up a readable replica and one about cross-database queries in Azure SQL DB. I refresh the tracking page for my new MacBook Pro – it’s made it from Shanghai to Chicago. C’mon, little buddy. (Sitting)

6:00-7:30 – Learning about Lambda event sources and limits. Looking at my calendar, today is an appointment-free focus day that I’ve blocked out for design work on SQL ConstantCare®’s index and query recommendations. With that in mind, I wanna think about how we do that processing. So far, most of the data is hosted in AWS Aurora PostgreSQL, and the application code runs in AWS Lambda using the Serverless framework. However, as we start to analyze query plans, I don’t want those big XML plans stored in a relational database, nor do I want the advice stored there – the relational database is just too much of a bottleneck if we wanna process a lot of plans fast. To scale the new process, could we:

- Store each incoming execution plan as a file in S3

- Have the new file trigger an AWS Lambda function to analyze that plan and make recommendations (and will the function finish in time, given the 300-second limit per function)

- Store the recommendations somewhere other than a database (say, in DynamoDB or in another S3 file)

To pull that off, I need to think about whether the plan analysis functions will need any data from the database (like data about the client’s server, or rule configuration data). I don’t wanna do the architecture design, mind you – Richie’s the architect here – but I just wanna learn enough to have a good discussion with him about how we do it. I finish up my learning by searching for related terms, plus phrases like “problem” or “bug” to see issues people are running into, like this one.

7:30-8:00 – Emails. A sales prospect from yesterday signed their contract, so did some logistics with that. A training class student sent in DMV data about their SQL Server, so analyzed their plan cache and explained the issue they’re facing. FedEx emails to say my new laptop is on the truck for delivery by 10:30AM today. Woohoo!

8:00-8:30 – Break. Make a pot of coffee, down a yogurt, start the laundry.

8:30-9:00 – Emails. More sales inquiries, and an attendee question from my user group presentation last night.

9:00-10:00- Postgres query writing. In our development environment, Richie’s already got ConstantCare.exe sending in index and query plan data. I open up Postico – it’s a Postgres client for the Mac, like the equivalent of SSMS – and start spelunking around in the tables.

This takes a second to wrap your head around, but:

- ConstantCare.exe runs on the clients’ machines

- It queries the clients’ SQL Servers, getting data from DMVs

- ConstantCare.exe exports that data to JSON format, and uploads it to the cloud

- In the cloud, AWS Lambda functions import those JSON files, and insert them into a database (AWS Aurora Postgres)

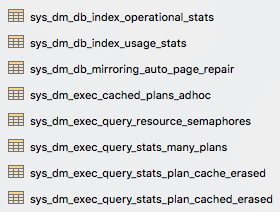

So I’m querying SQL Server DMVs, but in Postgres, and with ever-so-slightly different names (note the underscores in tables rather than sys.dm_db_index_usage_stats. At first glance, you might think, “Ah, so he’s just editing sp_BlitzIndex so it works off these new table names!” But no, we’re starting again from scratch up in the cloud because up here, we have the benefit of historical data.

For example, the first easy query I start with is warning folks when they have a heap with forwarded fetches, something sp_BlitzIndex already alerts you about. However, in the cloud, I wanna compare today’s forwarded fetches to yesterday’s. You might have forwarded fetches reported on a table that isn’t in active use anymore, but SQL Server is still reporting forwarded fetches since startup. No sense in pestering them about something that isn’t a problem.

10:00-10:30 – Breakfast, and the new laptop arrives. Erika’s up, makes eggs. It takes a special relationship to be able to live together, work together from home, and even work for the same company. We’re pretty much around each other 24/7, and it works. If she passes away before I do, I’m gonna be single for the rest of my life.

Unpack the new laptop, put on the new protective case, boot it up, and point it to my Time Machine backups on the network. Apple makes it delightfully easy to buy a new machine. (We’ll see how the Core i9 throttling issue goes, although I rarely run my laptop at 100% CPU for 20-30 minutes as shown in the video.)

Woohoo! New laptop arrived, going straight into a protective case. pic.twitter.com/7hD2UgAJic

— Brent Ozar (@BrentO) July 18, 2018

10:30-11:00 – Communication. I tweet a photo of the new laptop, which leads to some discussions on Twitter and LinkedIn, and then did some emails.

11:00-11:45 – Office Hours podcast. Every Wednesday, we get together in GoToWebinar, take live questions, and fumble our way through answers. This podcast would take a lot more out of my calendar were it not for the wizardry of DigitalFreedomProductions.com. They record it, upload it to our YouTube channel, make a podcast out of it, transcribe the audio, and create a blog post with the transcription.

11:45-12:15 – Emails. Customer emails, contract negotiation with a prospect.

12:15-1:30 – Lunch. Erika and I head to Wishbone where we both get the same thing every single time: blackened chicken with sides of spinach and red beans (no rice.) We started doing Weight Watchers quite a while back, and I’ve just about hit my target weight (185), but that food is good enough (and low points enough) that I’d eat it even if I wasn’t watching my delicate figure.

1:30-2:00 – Erik’s new book arrives. All productivity stops. So much for this being a focus day.

2:00-3:30 – Back to Postgres query writing. Building my first proof-of-concept for the rebuild-your-heaps script. This is the first index analysis script we’ve done in Postgres, and the first one is always the hardest, putting together the right joins between the right tables, comparing to yesterday’s forwarded fetches, and looking for edge cases that would break it. I’m by no means done – I’ll need to keep going on this tomorrow, but once I’ve got the first index analysis script done, the next one will be a lot easier.

3:30-4:00 – Emails. Signing an NDA for a prospective client, answering a few mentoring questions.

4:00PM – Done. Calling it a day. Not unusual for me – I tend to get a lot done in the mornings, and then coast to a stop in the afternoons. I didn’t make as much progress as I’d like today, but that’s life. Thankfully, I have the next 2 days on my calendar blocked out for the same task, and I’ll likely do better tomorrow. I won’t blog my day again tomorrow though – I’ll hold off for a few days until next week when I’m working with a client. That’ll show a different kind of work day.

5:40-5:45 – Update SQLServerUpdates.com. I get notified that Microsoft published 2017 CU9, so hop into the home office to update the site.

So there you have it. I have no idea what you’ll think about that day, but if you enjoyed this (or if you think *I* work a lot), read Jen Stirrup’s Tuesday.

6 Comments. Leave new

Hey Bren! I don’t want to be a “bad news” messenger, but the throttle still occurs without too much time doing 100% workload… just a minute or two at near 100% is enough… temp & power consumption are the triggers of the throttling…

Now, about Erik’s book, it’s really great… I’ve purchased the Kindle Edition, and I will probably buy it again for my wife 😀

Luis – thanks! Yeah, that’s okay – I don’t spend that much time near 100%, so that’s not a big deal. (And we keep the house at around 62-63, so that may help me too, hahaha.)

Hi Brent,

You titled this post “A Very Unproductive Day…” but I am going to argue with you about that. Not the title, you can name your posts whatever you like, good sir – the “unproductive”…

In days of old, knights slew dragons. As (mostly-) normal humans – and guys – we love the fight scene. Let’s face it, that’s where all the action is.

Or is it? It turns out there’s a lot that must happen leading up to the fight.

I learned in the Army that six people are required behind the lines to support each fighting troop. Six. What do those six people do? Some drive trucks transporting boxes of stuff from location A to location B. Some cook. Some shuffle administrative paperwork so everyone – fighters and support personnel – get paid. Some wash dishes. It’s a long list. My point is this: You did some good thinking today. “Thinking is the hardest work there is, which is probably the reason why so few engage in it.” – Henry Ford Henry is right.

In a few days, or a few weeks, or a few months, or a few years… you’re going to deliver something (else) that looks to all the world like another awesome overnight success (like SQL ConstantCare®). You’ll know the backstory – as I do – that the path to that success was enabled (yes, *enabled*) by days like this where you pushed the pebble forward. This is a day you will build on, sir. You will build from this spot to reach the next and, truth be told, you cannot get to where you’re going without passing through this day and others like it. In my humble opinion, today you picked a needle and moved it (I read about that somewhere… oh yeah – here: http://ozarme.wpengine.com/2013/03/pick-a-needle-and-move-it/).

So I argue that today was not unproductive at all.

:{>

PS – Nice laptop and cover!

Hahaha, thanks, sir. Well, I kinda define productivity as deliverables – did I deliver something today? Emails don’t usually feel like great deliverables. They do lay groundwork for stuff, but they’re nowhere near as fulfilling as, say, banging out a finished stored procedure. (sigh) Still at work though.

[…] tries to get it burning this morning, I imagine. I start Try #1 with some dead twigs. I comment on Brent’s post about #SQLCareer and fill up the Buffer buffer for the day. I open Outlook and see an email from Kent Bradshaw. I […]

If nothing else, you got me to grab the book.