With the first vendor-based 24 Hours of PASS approaching, it’s a good time to learn how to think critically.

Critical thinking doesn’t mean criticizing – it means seriously examining the evidence and basing your decision on facts. For example, when reading a vendor benchmark or white paper, it means asking:

- What does the evidence say?

- What evidence is missing?

- How is the evidence being interpreted by the author?

- What’s the author’s bias, and what is my own bias?

- Are we purposely being misled?

- What conclusion should I draw from the evidence?

Let’s do a real-life example together.

Fusion-io’s SQL Server 2014 Performance Tests

Fusion-io (who just got bought by SanDisk) published a SQL Server 2014 performance white paper. It opens by describing the database and hardware involved:

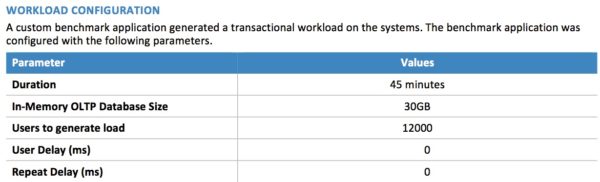

It’s a 30GB database with 12,000 users that continuously query the database – that’s what the 0ms user delay and 0ms repeat delay mean. But what do they really mean? Thinking critically means taking a moment to think about each fact that’s presented in front of you.

Is This Load Test Realistic?

When you or I think about a system with 12,000 people using it, those meatbags take some time between queries. They click on a link, read the page contents, maybe enter some data, and click another link. That’s delay.

Fusion-io is setting up a very strange edge case here – there are maybe a handful of systems in the world with this style of access pattern, and the word “users” doesn’t really apply, because human beings don’t run queries continuously with 0ms waits.
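To put rough numbers on why think time matters, here’s a minimal sketch of the interactive response time law for a closed system, X = N / (R + Z): N users, each waiting R seconds for a response and then Z seconds of think time before sending the next request. The 10ms response time and 10-second think time are assumptions picked for illustration; they don’t come from the white paper.

```python
def offered_load_per_second(users: int, response_time_s: float, think_time_s: float) -> float:
    """Interactive response time law for a closed system: X = N / (R + Z).

    users           -- N, number of concurrent users (12,000 in the white paper)
    response_time_s -- R, seconds the system takes to answer one request (assumed)
    think_time_s    -- Z, seconds a user waits before sending the next request
    """
    return users / (response_time_s + think_time_s)


USERS = 12_000
RESPONSE_TIME_S = 0.010  # assumed 10ms per request, for illustration only

# Real humans: assume ~10 seconds of reading and clicking between requests.
human_rate = offered_load_per_second(USERS, RESPONSE_TIME_S, think_time_s=10.0)

# The white paper's setup: 0ms user delay, so every "user" fires again immediately.
zero_delay_rate = offered_load_per_second(USERS, RESPONSE_TIME_S, think_time_s=0.0)

print(f"with 10s think time: {human_rate:,.0f} requests/sec")
print(f"with 0ms think time: {zero_delay_rate:,.0f} requests/sec")
print(f"ratio: {zero_delay_rate / human_rate:,.0f}x")
```

In a real zero-delay test the server saturates and R grows, so the second number is the demand the load generator tries to push rather than throughput anyone actually gets. The point is the ratio: 12,000 zero-delay “users” behave like roughly a thousand times as many humans under these assumptions.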

The database size doesn’t make sense either. On real-life systems like this, the database is way, way larger than 30GB.

So already, just from the database and load test description, this gives me pause. It doesn’t mean that the benchmark is wrong – the numbers may be completely valid for this strange edge case – but it does make me question why the benchmark would be set up this way. Possibilities include:

- The load testers were tight on time, and they wanted to set up a worst-case scenario quickly

- The hardware & software only performs well in this strange edge case

- The load testers don’t have real-world experience with this type of load, so they made incorrect assumptions

Do Load Tests Have to Be Realistic?

The best load tests identify a real-world problem, then attempt to solve that problem in the best way. (Best means different things depending on the business goals – sometimes it’s fastest time to market, other times it’s the cheapest solution.) When you’re reading a load test, ideally you want to see the customer’s name, their pain point, how the solution solved that pain, and what load test numbers they got.

This document is not one of those.

And that’s completely okay – not every load test can be the best one. Load testing is really expensive: you need to lock down hardware, plus get staff who are very experienced in storage, SQL Server, and load testing. Even just writing this documentation, producing the graphs, and fact-checking it all is pretty expensive. I wouldn’t be surprised if Fusion-io spent $50k on this document, so they want it to tell the right story, and tell it fast.

The awesome thing about critical thinking is that just by reading the test parameters, we’ve already learned that this is an edge case scenario where Fusion-io is trying to tell a story. They’re not necessarily telling the story of a real world customer – they’re telling the story of SQL Server 2014 features and their own hardware.

Hardware Used for the Fusion-io Load Tests

For this load test, what storage did Fusion-io choose to compare?

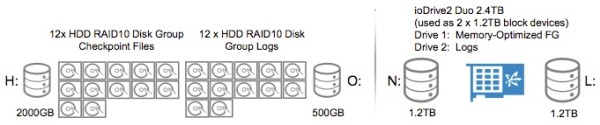

More alarm bells. Say you needed to host a 30GB database – raise your hand if your employer would be willing to give you 24 hard drives or a 2.4TB SSD. Anyone? Anyone? Bueller? Bueller?

Granted, we buy storage for performance, not for capacity, but both sides of this load test are questionable. If I tried to tell my clients to buy a 2.4TB Fusion-io to handle a 30GB database, they’d fire me on the spot – rightfully. This setup just doesn’t make real-world sense.

I know it’s hard to hear me over the alarm bells, but let’s look at the mechanics of the test.

Performance Test: Starting Up the Database

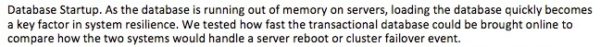

Fusion-io tested how quickly a database with in-memory tables starts up, saying:

Cluster failover – wat? We’re talking about local storage here, not a shared storage cluster. If they’re talking about an AlwaysOn Availability Group where each node gets its own copy of the data, then there’s no database load startup time – the database is already in memory on the replicas.

If every second of uptime counts, you don’t use a single SQL Server with local SSDs.

So now we’re pretty far into alarm bell territory. The test case database is odd, the load test parameters aren’t real-world, the hardware doesn’t make sense, and even the test they picked doesn’t match up with the solution any data professional would recommend. Faster storage simply isn’t the answer for this test.

But let’s put the earplugs in and keep going to see the test results:

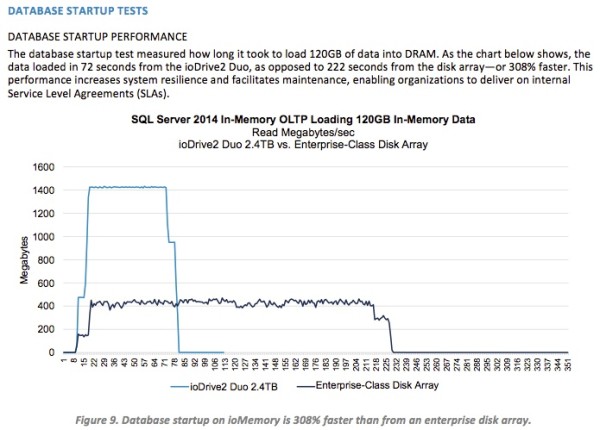

The Fusion-io maxes out at 1400 MB/sec, and the spinning rust at 400 MB/sec. Fusion-io wins, right?

Not so fast. Remember that we’re reading critically, and we have to question each fact. What is this “Enterprise-Class Disk Array” and is it really representative of the name on the box?

For reference, 400MB/sec sequential reads is slower than a $100 desktop SSD. It’s also slower than 4Gb fiber, one of the slowest connection methods you can use for an “enterprise-class disk array”. Fusion-io, I served with enterprise-class disk arrays. I knew enterprise-class disk arrays. Enterprise-class disk arrays are a friend of mine. Fusion-io, you did not use an enterprise-class disk array in your tests. (Source quote for us old folks.)
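For a rough sense of where that 400MB/sec sits, here’s a back-of-the-envelope sketch of the usable bandwidth of a single 4Gb Fibre Channel link. It assumes the standard 4GFC line rate of 4.25 Gbaud with 8b/10b encoding (8 data bits for every 10 bits on the wire) and ignores frame headers and other protocol overhead, so real-world numbers land a bit lower.

```python
def fc_link_mb_per_sec(line_rate_gbaud: float, data_bits: int = 8, coded_bits: int = 10) -> float:
    """Approximate usable one-direction bandwidth of a Fibre Channel link in MB/sec.

    Assumes 8b/10b line coding and ignores framing/protocol overhead.
    """
    payload_bits_per_sec = line_rate_gbaud * 1e9 * data_bits / coded_bits
    return payload_bits_per_sec / 8 / 1e6  # bits -> bytes -> decimal megabytes


four_gig_fc = fc_link_mb_per_sec(4.25)  # 4GFC signals at 4.25 Gbaud
print(f"4Gb FC ceiling: ~{four_gig_fc:.0f} MB/sec per direction")
print("array result:    400 MB/sec")
```

Under those assumptions a 4Gb link tops out around 425MB/sec before overhead, so the “enterprise-class” array’s 400MB/sec sits right at that ceiling. That’s consistent with (though it doesn’t prove) an array being throttled by one slow link.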

When you’re thinking critically, sometimes it helps to put yourself in the author’s shoes. What is my mission in writing this document? Who’s paying my check? What’s the story I need to deliver? If I purposely wanted to sandbag a storage load test, I’d connect a large number of hard drives (thereby looking fast and expensive) through a very small connection, like antiquated 4Gb fiber. Then I’d leave out that crucial connection detail in the load test setup details. Voila. That way, when we tracked the length of time it took to load data from those drives, it’d look like an artificially long time because we’re trying to drink a lot of data through a tiny straw.
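The “tiny straw” effect is just a minimum: a storage path can’t deliver more than its slowest stage. Here’s a minimal sketch; the per-drive throughput (150MB/sec) and the link speed (400MB/sec) are hypothetical numbers for illustration, since the white paper doesn’t disclose either.

```python
def effective_throughput(drive_count: int, per_drive_mb_s: float, link_mb_s: float) -> float:
    """Sequential read throughput is capped by the slowest stage in the path."""
    drives_total = drive_count * per_drive_mb_s  # what the spindles could stream together
    return min(drives_total, link_mb_s)          # ...but the connection caps it


# Hypothetical: 24 spinning drives at ~150 MB/sec each behind a ~400 MB/sec link.
drives_could_do = 24 * 150
actually_delivered = effective_throughput(24, 150, link_mb_s=400)
print(f"drives could stream {drives_could_do} MB/sec, host sees {actually_delivered} MB/sec")
```

Adding drives raises the first term but never the second, which is how a big, expensive-looking drive count can still benchmark slow.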

But if the numbers STILL didn’t make conventional storage look bad enough, I’d use a bigger database file so that the time difference seemed more drawn out.

Speaking of which, let’s take a closer look at the first sentence above that chart:

Heeeeey, wait a minute… I thought our in-memory OLTP database was 30GB?

Now, I could stretch the limits of credibility by saying, “Well, the entire database is 120GB, but the in-memory portion is only 30GB. I still need the rest of it in memory to warm up my cache, though.” Unfortunately, that doesn’t hold water because the test says it’s testing “how fast the transactional database could be brought online.” Even if the rest of the database isn’t in memory, it’s still online. The numbers don’t match the text.
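Here’s why the database size matters for how dramatic the chart looks. This idealized sketch assumes each system reads the entire database at its peak rate from the chart (1400 MB/sec and 400 MB/sec) the whole time, which a real startup won’t sustain, so treat the output as illustrative only.

```python
def load_seconds(size_gb: float, mb_per_sec: float) -> float:
    """Time to read size_gb at a constant rate, using decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000 / mb_per_sec


FAST_MB_S = 1400  # peak from the Fusion-io side of the chart
SLOW_MB_S = 400   # peak from the "enterprise-class disk array" side

for size_gb in (30, 120):
    fast = load_seconds(size_gb, FAST_MB_S)
    slow = load_seconds(size_gb, SLOW_MB_S)
    gap = slow - fast
    pct = 100 * gap / slow
    print(f"{size_gb:>4} GB: {slow:6.1f}s vs {fast:5.1f}s -> {gap:6.1f}s apart ({pct:.0f}% less)")
```

The percentage is identical at both sizes, but the gap in seconds grows linearly with the file: a 120GB file makes the same hardware difference look four times as drawn out as a 30GB one.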

So Is Fusion-io’s Performance Test Valid?

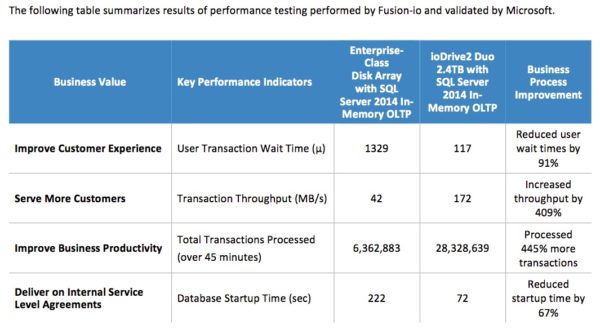

The numbers might be correct, but the real question is how the data is interpreted. As Kendra Little says, performance numbers don’t tell a story – they help you tell a story. Here’s the story Fusion-io tells in the executive summary, and note that the top says it’s been “validated by Microsoft.” Let’s see what story Microsoft validated:

I’ll use these exact numbers to tell a different story:

Improve Customer Experience – note that these metrics are in microseconds. The user transaction wait time on the “enterprise-class disk array” was just 1.3 milliseconds. Most customers in the real world would kill for consistent 1ms storage writes. If your storage is running slower, you could switch over to this (crappy) disk array, get faster throughput, AND have the benefit of failover clustered instances with zero data loss and automatic failover. A single ioDrive, on the other hand, does not give you zero data loss failovers unless you switch to synchronous storage mirroring or database mirroring, and both of those will have higher latencies than the disk array used in the example. If you care about startup – as the test purports that it does – then the winner here is actually the disk array, crappy as it is. I would change my verdict here if the tests included the overhead of synchronous AlwaysOn Availability Groups or synchronous database mirroring in the Fusion-io example.

Serve More Customers – both of these systems got less throughput than the same hardware I tested last year that happened to be using $500 consumer SSDs. I’d be really curious to see how my Dell 720 compared to their Dell 720. (For reference, we had 8TB of usable capacity plus hot spares on the shelf for $8k.)

Improve Business Productivity – business productivity implies that real world users are sitting around waiting for the query to finish, but we’re talking about the difference between a 1.3ms transaction and a 0.1ms transaction. If your users really can’t get their job done because their single transaction is taking that extra millisecond, allow me to introduce you to the magical world of batch processing instead of row-by-row singletons.
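To see how small that millisecond is, and why batching matters more than shaving it, here’s a rough sketch using the 1.3ms and 0.1ms per-transaction waits (1,300 and 100 microseconds). It assumes, purely hypothetically, that a batched transaction of 1,000 rows pays roughly the same commit wait as a single-row one. That’s a simplification; real batches cost more as they grow.

```python
import math


def total_wait_ms(rows: int, per_txn_ms: float, rows_per_txn: int = 1) -> float:
    """Total commit wait if each transaction pays a fixed latency.

    Assumption: commit latency dominates, so a transaction's wait is roughly
    per_txn_ms no matter how many rows it carries.
    """
    transactions = math.ceil(rows / rows_per_txn)
    return transactions * per_txn_ms


DISK_ARRAY_MS = 1300 / 1000  # 1,300 microseconds = 1.3ms
IODRIVE_MS = 100 / 1000      # 100 microseconds = 0.1ms
ROWS = 10_000

print(f"row-by-row on the array:   {total_wait_ms(ROWS, DISK_ARRAY_MS):,.0f} ms")
print(f"row-by-row on the ioDrive: {total_wait_ms(ROWS, IODRIVE_MS):,.0f} ms")
print(f"batches of 1,000 on array: {total_wait_ms(ROWS, DISK_ARRAY_MS, rows_per_txn=1000):,.1f} ms")
```

Under that assumption, batching on the slower array beats row-by-row on the faster card by a wide margin.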

Deliver on Internal Service Level Agreements – the words “reduced startup time by 67%” are factually incorrect due to the 120GB switcheroo, and they conveniently leave out the cost of an actual system restart: Windows shutdown, BIOS post, Windows startup, and SQL Server startup. In reality, if you need higher SLAs, you use SQL Server 2014’s AlwaysOn Availability Groups so your secondary replica can already be online and ready to go.
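The gap between “startup time” and “restart time” is easy to quantify. In this sketch every phase duration is a made-up placeholder (the white paper reports none of them); only the structure matters: a percentage cut to one phase shrinks once the fixed phases of a real restart are counted.

```python
# Hypothetical restart phases, in seconds. None of these come from the white paper.
FIXED_PHASES_S = {
    "windows_shutdown": 60,
    "bios_post": 120,
    "windows_startup": 90,
    "sql_server_service_start": 30,  # service start, before the database load itself
}


def restart_reduction_pct(db_load_before_s: float, db_load_after_s: float) -> float:
    """Percent reduction in total restart time when only the database-load phase speeds up."""
    fixed = sum(FIXED_PHASES_S.values())
    before = fixed + db_load_before_s
    after = fixed + db_load_after_s
    return 100 * (before - after) / before


# A 67% cut to a hypothetical 300-second database load...
db_before, db_after = 300, 300 * (1 - 0.67)
print("database-load phase: 67% faster")
print(f"whole restart:       {restart_reduction_pct(db_before, db_after):.0f}% faster")
```

With these placeholder numbers the headline 67% turns into roughly half that for the restart a user would actually sit through.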

Fusion-io’s product might indeed be better than its competition, but this misleading white paper doesn’t give you the real evidence you need to arrive at that conclusion – no matter who validated the paper.

Welcome to Marketing. The Product is You.

Sadly, this is how vendor marketing works today. It’s a race to the bottom with vendors using all kinds of tricky tactics to make their product seem better. Heck, even Microsoft marketing uses this tactic when they claim AlwaysOn Availability Groups give you 100% availability.

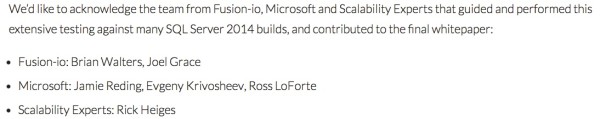

This is why I get so nervous when I see vendors take over community events like the 24 Hours of PASS. The very first session this year is on this topic, co-presented by the very same guys at Microsoft, Fusion-io, and Scalability Experts who built this white paper:

I get nervous when I push Publish on this post because I know these guys aren’t gonna be happy. The reality is that they got paid to write the white paper, and you’d better believe Fusion-io wanted a result that painted their drives in the best possible light. The authors achieved that goal, and they’re not going to be happy that I’m focusing my small flashlight on the contents of this paper. (Sorry, guys, but this is a story that needs to be told.)

But I have to publish this post because I think it’s important for the community to have real journalism. It’s important for us to reflect on what’s happening in the community, to question what we’re being told, and to get to the bottom of the truth. I knew this white paper had questionable evidence when I first saw it, and I let it go, but now that it’s become the anchor session for the 24 Hours of PASS, it’s time to start asking questions about marketing vendors at our community event.

PASS is us. The community is you and me.

When we let a vendor use material like this to teach our junior data professionals during the 24 Hours of PASS, what message are we sending?

This is absolutely, positively not okay with me. Is it okay with you?

20 Comments

Wow. This is just shameful. I had Fusion-IO speak at my user group a long time ago, and was impressed by their technical knowledge and the fact that, at the time, the product practically sold itself due to its performance characteristics.

They don’t need to stoop to this level to sell their product. They should be especially ashamed of presenting it at a technical event (or one that purports to be a technical event, even though this appears to simply be licensing a name).

I’m disappointed in the vendor, in Microsoft for “validating” this test, and in PASS for putting its name on this event which looks to be nothing more than a marketing push.

Joe – thanks for the comment. I’d agree – Fusion-io’s gear does a good job of speaking for itself. It reminds me of how Ferrari treats car magazines.

Thanks for doing this Brent. This is a huge service to the SQL Community.

If you drew a Venn diagram of people technical enough to evaluate these benchmarks, people who care enough to be active in the community, and people with the journalistic leanings and skills to write something like this, the intersection would be a very small group. I’m happy to know it has at least one member: you.

I can tell the difference between a marketing talk and a technical talk (like the 24HOP community talks we’re used to), and I’m sure many others can too.

I’m like many junior data professionals in that I don’t have all of the skills myself to evaluate some of these technical claims. In these cases, I often come away from the talk/paper with a reserved or undecided approach to what I’ve read.

Reading your post, I’ve come to realize that evaluating hardware properly takes skills, and those skills need to be developed. That kind of training doesn’t begin with a vendor-written white paper (unless maybe it’s source material for a case study).

Michael – thanks, I really appreciate the kind words.

I get a lot of rocks thrown at me for being too negative, especially for posts like this, but we have to have these kinds of open, frank discussions – especially with the 24 Hours of PASS being based on vendor materials like this.

I’d like to see Fusion IO compared with two or four SSDs in RAID 1 or RAID 10. Even a few SSDs should be hard to max out, and the price would hopefully be much lower.

Tobi – the trick is that someone has to fund that testing. Like I wrote, a test like this can easily cost $50k, and who’s going to pay the bill for that load testing? The commodity SSD vendors won’t do it because the ROI isn’t there. They might sell a few hundred more SSDs, but there’s not $50k of profit in that.

Our systems team brought in the Fusion IO guys to try to convince the DBA Team that we needed their products. It went basically like this:

Fusion IO Salesman: “We’re awesome.”

Me: “Yes you are. I don’t need that much awesomeness.”

Fusion IO Salesman: “But we’re awesome. And you can be awesome too”

Me: “Nope. Don’t need it. IO isn’t our issue. Crappy code is.”

Salesman: “But don’t you want to be awesome by association?”

Me: “Can it rewrite crappy code? No? Then no thanks.”

Robert – yeah, to some extent, that’s a problem I see often. But they have YOU, and you can fix that. 😉

Great post Brent! There’s another pain point to this marketing/ad driven benchmarking, which is that department level to C-level (e.g. CIO, CTO) management that you encounter in a performance situation will use it as their expectation. For example, the RAID card or SAN vendor brochure says that you’ll get x tps from MS-SQL using their product, so that’s what you as the DBA have to hit. Never mind that the data model is horrible and the server is so overloaded that you have locking issues that no magical RAID card is going to overcome. Or that the SAN was misconfigured because nobody read deep into the technical documentation to see that default settings shouldn’t be used if the SAN was hosting database files. None of this matters because the managers who requested and got the funds for this hardware did it based on the glossy brochure’s optimistic (or overblown) numbers. Now they have a problem, which means they are looking to make it somebody else’s problem, and when that somebody is you it’s lousy. Of course, I’ve learned more about RAID controllers and SANs than I ever thought I would, so I guess that’s a plus.

Thanks for being someone out there questioning things like this. I also appreciate the tie-in to this 24 Hours of PASS issue. If questioning these things and writing about them is considered “too negative,” then there’s some serious Kool-Aid being gulped down out there.

Fantastic post, Brent. Thank you!

Merrill – thanks sir!

Noel – yep, Fusion-io’s marketing is top notch, and they know how to open management wallets. I’m really disappointed that it looks like this message will even get into our community event sessions.

Great post as always!! Keep up the good work.

Straight to the point and precise. Let’s hope Fusion-io and others consider the bad rep they get from marketing like this and go back to the narrower, more honest path. I do love Fusion-io’s products, though, but that is another story…

Martin – thanks sir. You’re absolutely right – the product can be professional while the marketing is not. It’s good to differentiate between them and reward the company for a good product.

[…] Thinking Critically About FuisionIO’s SQL 2014 Tests – Brent Ozar (Blog|Twitter) […]

These are odd benchmarks, but (as you seem to suggest yourself at some points) I’m not sure if they’re the result of choosing a contrived scenario that makes the FIO cards look better, or just sloppy thinking and inexperience. You know, “Never ascribe to malice that which is adequately explained by incompetence,” and incompetence in IT seems to go up to around the 90th percentile. Either way, I hope posts like this get widespread attention and encourage vendors to conduct better tests in the future. One thing I’d really like anyone performing benchmarks to do is share the raw data and documentation of their methodology.

James – normally I’d agree with you, but the authors of this study are very experienced. Rick Heiges is a former member of the PASS Board of Directors and consults with Scalability Experts. Ross LoForte is a Microsoftie who’s authored books and runs the engagements at the Chicago Microsoft Technology Center. Knowing that, how does it affect your interpretation?

Hi Brent, I hadn’t heard of these guys before, but good point. In that case, your interpretation does seem more plausible. To be charitable, I’m going to assume their contribution wasn’t in name only.

You have valid points. Often, from levels above my sanity, I hear, “Hey, let’s put Fusion-io cards in to fix the ‘code’ issues.” I appreciate what they are trying to say, but most of us who have had the opportunity to test-drive their products know how outstanding they can be. This white paper had me scratching my head, though, because, as you pointed out, the enterprise array stats were actually good. Personally, I don’t think they told the story well on this one, but you didn’t call them out in a bad way, just offered a reminder for perspective. To be fair, Fusion-io and Scalability Experts are sometimes fallible too, so keep that in mind; we don’t have all the facts, as you alluded to, but you did point out good observations.

[…] have the time before this session to really analyze the deck, although I’ve written about the wild inaccuracies of Fusion-io’s SQL 2014 tests before. Let’s just look at the takeaways from the first feature […]

Interesting read, and thank you very much. I am still a Jr DBA myself, and I would be one of the folks who get fooled by improperly presented information, as my magnification into the depths of SQL is still low compared to seasoned veterans’. I am still young and learning… though I already have my share of scars =)